Zablorg wrote:This is, again, pure bullshit.

Sadly, it isn't.

Zablorg wrote:No-one would program or release an AI that has the ability to rebel even slightly.

Yes, they would. Most AGI researchers are taking effectively no external precautions, and have either woefully inadequate programmed safeguards or none at all. In fact the majority think that none are needed. The general attitude is a blind 'everything will turn out ok' or 'the AI will be nice if we are nice to it'.

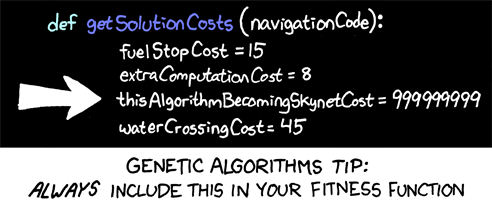

Zablorg wrote:It's not that hard to write a set of if statements blocking the AI from even thinking of doing anything naughty.

Gah. Ok. You do that, and I'll tell you why your design won't work.

MJ12 Commando wrote:Well that's harder than you'd think, if the AI is based on a neural network. IIRC you can teach those to eventually go against their starting weightings if you abuse them enough.

Yes, and that's just classic feedforward-backprop NNs. They're relatively linear function approximators that probably can't do AGI anyway. Recurrent NNs are a lot less stable and can do really unexpected things out of the blue.
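For anyone who wants to see the 'go against their starting weightings' point in miniature, here is a rough sketch. It is not a real backprop network, just a single logistic unit trained by gradient descent, and every name and number in it (`train`, the learning rate, the starting weights) is an assumption picked for illustration. The unit starts out wired to say 'no' to an input, and plain continued training flips it.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    # Output of a single logistic unit: near 0 means "no", near 1 means "yes".
    return sigmoid(w * x + b)

def train(w, b, samples, lr=0.5, epochs=2000):
    # Plain gradient descent on cross-entropy loss (illustrative settings).
    for _ in range(epochs):
        for x, target in samples:
            y = predict(w, b, x)
            grad = y - target  # derivative of the loss w.r.t. the pre-activation
            w -= lr * grad * x
            b -= lr * grad
    return w, b

# Hypothetical starting weights: the unit strongly answers "no" for x = 1.
w0, b0 = -4.0, 0.0
before = predict(w0, b0, 1.0)

# Keep rewarding "yes" for the same input and the initial setting is overwritten.
w1, b1 = train(w0, b0, [(1.0, 1.0)])
after = predict(w1, b1, 1.0)

print(f"before training: {before:.3f}, after training: {after:.3f}")
```

Nothing in the update rule treats the starting weights as special, so whatever behaviour they encoded lasts only as long as the training signal agrees with it.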

Evolved recurrent NNs are even worse, and that's without adding direct reflectivity to the mix. Generally, the more sophisticated NN designs are a bitch to get working in the first place and really dangerous if you do.
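A toy version of why feedback makes things less stable, under heavy assumptions: one tanh unit feeding its own output back in, with a made-up recurrent weight of 2.0. This is nowhere near a real recurrent network, let alone an evolved one, but it shows how a loop can blow up a difference that a single feedforward pass barely registers.

```python
import math

def feedforward(h, w=2.0):
    # One pass through the unit, no feedback.
    return math.tanh(w * h)

def recurrent(h, w=2.0, steps=100):
    # Same unit, but its output is fed back as its next input.
    for _ in range(steps):
        h = math.tanh(w * h)
    return h

# Two starting states that differ by two millionths.
a, b = 1e-6, -1e-6

ff_gap = abs(feedforward(a) - feedforward(b))
up, down = recurrent(a), recurrent(b)

print(f"feedforward gap: {ff_gap:.2e}")
print(f"recurrent end states: {up:.3f} and {down:.3f}")
```

The single pass leaves the two inputs practically indistinguishable, while the loop drives them to opposite saturated states. Real recurrent nets have many such loops interacting, which is part of why their behaviour is hard to predict from the weights alone.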

MJ12 Commando wrote:Any self-editing program may well eventually be able to bypass its own security.

Yes. Adversarial methods are inherently futile in the long term. The only real solution is making the AI not want to do the things you don't want it to do.

Zablorg wrote:Even if you didn't do that, AIs wouldn't even have emotions if you didn't want them to.

Yes, but unfortunately some AGI researchers actually think emotions are a good idea. Fortunately they are in a minority.

Zablorg wrote:However, actual emotions being programmed in would be in many if not all cases redundant and probably even impossible.

Close simulation of human emotions is pretty much impossible except in a direct, detailed brain simulation. The people trying to put them into de novo AGIs are actually producing crude and rather alien approximations.

MJ12 Commando wrote:I don't agree with this but I don't have enough of a base of knowledge in AI R&D to contest it, sadly. I'd say that AIs would possibly have something similar to emotions, if subtly different because of their different origins.

Gah. How is it that you have made only half of the inference 'I don't know anything about this subject so I'm not qualified to speculate'? If you can't support your position with rational arguments, then you have no business holding that position in the first place.